Just last year, organizations were dropping their writers like hot potatoes to generate free content using AI tools. Entire marketing departments were getting the old heave-ho in favor of ChatGPT, Claude, Perplexity, and other generative AI tools.

The love affair was brief.

It didn’t take long for organizations to realize AI can’t replace skilled human writers. They began welcoming their writers and marketing teams back with open arms, with one caveat: all writing must pass AI content detectors.

Most writers would laugh if the pressure to prove they’re human weren’t wreaking havoc on their livelihoods. As it is, most of them are crying over the situation.

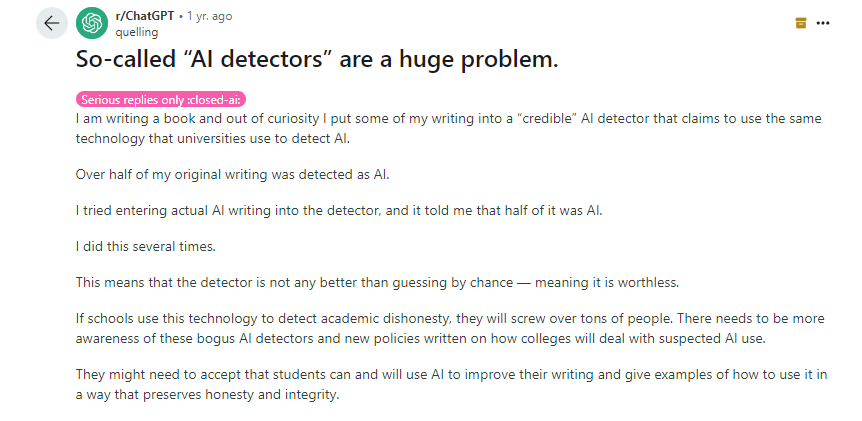

Social media is full of writing professionals pulling their collective hair out over AI detection tools that are anything but accurate.

Maritapm, a senior copywriter at a software development company, vented about these inaccurate tools on a copywriting subreddit. The company’s marketing manager required the entire department to use an AI content detector to prove their work was human-created, and the tool repeatedly flagged Maritapm’s work as AI-generated even though it wasn’t.

“So, today, my team lead scheduled a call with me and told me that the manager gave her a …oh god… ‘humanizer tool.’ I’m crying…It’s another tool that…humanizes the AI-generated content to bypass the AI content detectors. What is this…what am I doing?”

What the heck are AI content detectors?

AI content detectors attempt to identify whether content was created using artificial intelligence text generators like ChatGPT. These tools analyze linguistic patterns and sentence structures to make their determination. Their popularity surged after generative AI tools became available to the public and some organizations began using those tools to crank out content quickly and cheaply.

Businesses turn to AI content detectors because they see them as a convenient way to judge the originality and quality of the writing they commission from in-house teams and freelance writers.

The technology that makes AI detectors tick

The same technology that gave us ChatGPT and other large language models (LLMs) powers these AI content detectors, which claim to know whether words were written by a human or AI. That technology relies heavily on machine learning (ML) and deep learning (DL), a more advanced form of ML that uses neural networks with multiple layers to process and learn from large volumes of data.

ML and DL allow AI detectors to identify patterns and characteristics typical of AI-generated content, including repetitiveness and a lack of semantic depth.

AI content detectors rely on two key metrics, perplexity and burstiness, to judge whether writing is human or AI-generated. Perplexity is just a fancy way of saying these tools measure how predictable the text is to a language model: the lower the perplexity, the more predictable the text, and the more likely the detector is to label it AI-generated. Burstiness evaluates how much sentence structure and length vary. Less variation is a hallmark of AI.
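To make the two metrics more concrete, here is a toy Python sketch. Commercial detectors compute perplexity with large neural language models and don’t publish their exact scoring, so this is only an illustration under stated assumptions: burstiness is approximated as the coefficient of variation of sentence lengths, and a tiny unigram model with add-one smoothing stands in for a real language model. The function names and sample sentences are hypothetical.

```python
# Toy illustration only: real detectors score text with large neural language
# models. These hypothetical functions approximate the two ideas with simple
# statistics.
import math
import re
from collections import Counter


def split_sentences(text: str) -> list[str]:
    """Naively split text into sentences on ., !, or ? followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and keep only runs of letters and apostrophes."""
    return re.findall(r"[a-z']+", text.lower())


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence lengths, measured in words.

    0.0 means every sentence has the same length; larger values mean
    sentence lengths vary more.
    """
    lengths = [len(s.split()) for s in split_sentences(text)]
    if len(lengths) < 2:
        return 0.0
    mean = sum(lengths) / len(lengths)
    variance = sum((n - mean) ** 2 for n in lengths) / len(lengths)
    return math.sqrt(variance) / mean


def unigram_perplexity(text: str, reference: str) -> float:
    """Perplexity of `text` under a toy unigram model fit on `reference`.

    Add-one (Laplace) smoothing gives every unseen word a small, shared
    probability. Lower values mean the text is more predictable to this model.
    """
    counts = Counter(tokenize(reference))
    total = sum(counts.values())
    vocab_size = len(counts) + 1  # one extra slot shared by all unseen words
    tokens = tokenize(text)
    if not tokens:
        return float("inf")
    log_prob = sum(math.log((counts[w] + 1) / (total + vocab_size)) for w in tokens)
    # Perplexity is the exponential of the average negative log-probability.
    return math.exp(-log_prob / len(tokens))


if __name__ == "__main__":
    reference = "The cat sat on the mat. The dog sat on the rug."
    uniform = "The cat sat on the mat. The dog sat on the rug. The cat sat on the rug."
    varied = "Cats nap. The dog, restless after a long walk in the rain, sat on the rug. Why?"
    print(burstiness(uniform))  # 0.0
    print(burstiness(varied) > burstiness(uniform))  # True
    print(unigram_perplexity(uniform, reference) < unigram_perplexity(varied, reference))  # True
```

The repetitive, evenly paced sample scores lower on both measures, which is the direction a detector would read as more AI-like. It also hints at why false positives happen: plain, uniform human prose can score low on both.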

How accurate are AI content detectors?

Complaints about the ineptitude of AI content detectors abound online. The consensus among professional writers is that these detectors have significant limitations and are, quite frankly, making their lives a living hell.

Grace Leonne, Head Writer and Editor for GradSimple, analyzed results from seven of the most popular AI detection tools. She tested how consistently they identified AI usage, using a comprehensive methodology with varied writing prompts designed to mimic common scenarios.

She discovered publicly available AI detectors offered “extremely varied levels of AI detection, and the results of this test suggest they should not be used as sources of truth for accurate results.”

Researchers warn that AI detectors can’t guarantee 100% accuracy (or anywhere close to it) because they’re based on probabilities. Some even warn that AI detectors are biased against non-native English writers.

The takeaway? Take AI detection results with a grain of salt.

Can clients use AI content detectors to refuse payment?

One of the biggest complaints from writing professionals is dealing with clients using these tools to justify non-payment for services.

The first time it happened to Dianna Mason, founder of Acadia Content Solutions, was in March 2023. Mason specializes in legal content and works almost exclusively with clients in the legal industry. “I hadn’t even experimented with ChatGPT and had barely heard of it,” she said. “I had a client send back a draft telling me it was flagged as ChatGPT-generated.”

Mason knew she hadn’t used AI to create the content, but the client refused to accept, or pay for, the copy until it passed an AI detector. Every time she rewrote it, the copy was flagged as AI-generated. “At that point, I was losing money because it was taking me so long with all these rewrites that kept getting tagged.”

The client, one she had worked with many times before, terminated the contract because they didn’t believe she had written the copy without AI. “That’s the worst thing that’s ever happened to me,” Mason said. “It was soul-crushing.”

It wasn’t the first or last time Mason would struggle to prove she hadn’t used AI to write. She fired a couple of her clients because they kept telling her that her content was flagged as AI. “Once I even took video of myself showing my writing process and how I made changes – and they were still saying it was flagged as AI. The rub is these clients go and use AI after they fire me for supposedly using AI.”

Legal implications of using AI detection tools to deny payment

Scrutiny of the content creation process continues to grow as AI becomes more popular. AI detection tools are one resource organizations use to evaluate the quality, and humanness, of writing projects. However, clients who refuse payment based solely on a detector’s assessment may be exposing themselves to legal risk.

The most obvious risk is a breach of contract claim. Unless the contract specifically provides for the use of AI content checkers, the client generally can’t use a detector’s results as justification to avoid paying for deliverables.

Breaching contractual terms won’t end well for clients if a writer pursues legal action. There’s also the issue of keeping and using the content while refusing to pay. In the U.S., freelance writers generally retain the copyright to their work until it is transferred to the client, and many contracts tie that transfer to payment. So, not only could a client be sued for breach of contract, but they might also face copyright infringement claims.

Mason said her client contracts include a clause stating that she doesn’t use AI and prohibiting clients from using AI detectors as grounds to withhold payment. She also requires upfront payment to further protect herself.

Best practices for writers and clients

Jason Hewett, an SEO content writer based in New York, said he’s never had a client run his work through an AI detector after delivery. However, if a prospective client says they plan to use one, he refuses to work with them. “Instead of whether a writer has used AI, what they should ask is whether the submitted work follows their tone of voice … whether it’s factually accurate. But you can’t tell writers not to use it,” said Hewett.

Some of the suggested best practices for writers who use AI include:

- Use AI as a complement, not a replacement. Hewett said he uses AI to brainstorm ideas and create content outlines.

- Fact-check and edit all AI-generated content. “I always, always fact-check,” said Hewett. Writers who use AI should follow his lead, because AI tools are known to hallucinate and even cite sources that don’t contain the information attributed to them.

- Maintain brand voice. AI-generated content serves as a starting point. Always refine its output to match a brand’s style and tone of voice.

Writers who follow best practices for AI content creation tools should have discretion over whether to use them on client work, Hewett argued. The only exception is when doing so would compromise sensitive or proprietary information. “I respect those kinds of policies when it’s for companies that need to protect data,” he said.

How do content agencies cope with AI detection tools?

Allen Watson, founder of Blue Seven Content, said his agency doesn’t have a formal policy about AI-generated content in its deliverables. However, every single new client has asked whether his writers use AI to create content and, if so, how.

“Delving into the language of what counts as AI-generated can be tricky, particularly because the definition is kind of up in the air,” he said. “Does Grammarly count? It seems like everything has some kind of AI feature built in, and we could be walking into a trap by formalizing language right now.”

However, his agency regularly discusses expectations with its writers, including the use of LLMs to create content, which Watson said is forbidden. He trusts his writers to follow the guidelines and no longer uses AI detection tools to check their work. “We used to, but not anymore. They are irreparably flawed,” he said. “While they may be able to give you a decent idea about whether or not someone is using AI, there are simply too many false positives to rely on it.”

Watson said he’s yet to encounter a client claiming deliverables were AI-produced. However, if the situation arises, he does his best to explain the limitations of AI content detectors without sounding defensive. “Non-writers don’t quite understand the dangers of relying on flawed AI detection tools,” he said. “Explaining it could come across as trying to hide something on my end. I try to educate clients who may not understand the implications of AI and the detectors.”

He and his business partner, Victoria Lozano, schedule meetings to assure clients their content is human-created. “We are not zeros and ones behind a screen, and neither are our writers,” he said. “We believe that adding in the human element, combined with regular and open communication with clients, helps them to trust that we have their best interests in mind.”

How can writers combat this AI content detector trend?

Educating clients about the inaccuracy and unreliability of AI detection tools is one of the most effective ways to combat this growing problem. Plenty of research shows that AI content detectors frequently produce false positives, flagging human-written content as AI-generated.

Even OpenAI admitted its AI text classifier was unreliable, shutting it down in 2023 because of its low rate of accuracy. That admission from an AI company lends compelling support to writers’ distrust of these tools.

Another tactic is to suggest alternative evaluation methods to clients.

“Anybody who needs those tools to tell whether something was AI-generated, they obviously don’t know what they’re looking for,” said Mason.

Fact-checking and evaluating writing for style, tone, and expertise are more reliable ways to judge a writer’s work than AI content detectors.

Lastly, clients should raise specific concerns. They should be prepared to pinpoint which aspects of the content seem AI-generated, giving the writer an opportunity to defend the work. Writers, in turn, should be ready to provide evidence of their process to help clients feel more at ease, including:

- Outlines, drafts, and revision history.

- Time-stamped documents.

- Screenshots or screen recordings of work in progress.

Facing the fear of AI-generated content

Hewett said his best advice to organizations worried about writers using AI is to ask why they’re so concerned. In most cases, he said, their fears are unfounded.

“If it’s for NDA reasons, it’s no different than if you outsourced to a contractor,” he said. “If you find out they violated your NDA, you go after them legally. If you’re worried about quality, set standards and measure whether the content meets them, regardless of whether the writer used AI to assist.”